Idk why people don’t read the article before commenting.

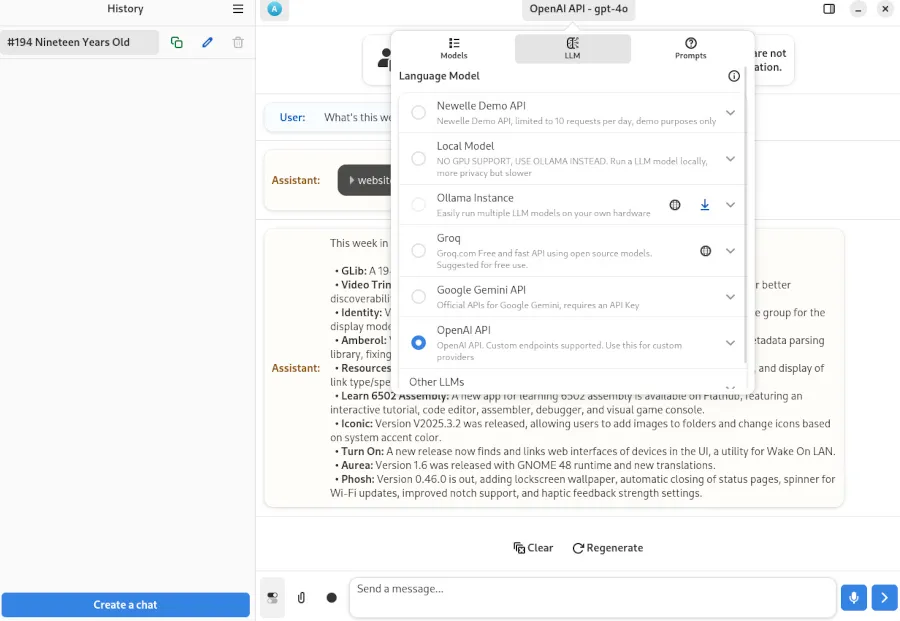

> Newelle supports interfacing with the Google Gemini API, the OpenAI API, Groq, and also local large language models (LLMs) or Ollama instances for powering this AI assistant.

So you configure it with your preferred model, which can include a locally run one. And it seems to be its own package, not something built into GNOME itself, so you can easily uninstall it if you won’t use it.

Seems fine to me. I probably won’t be using it, but it’s an interesting idea. Being able to run terminal commands seems risky though. What if the AI bricks my system? Hopefully they make you confirm every command before it runs any of them or something.
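The "confirm every command first" idea could look something like this minimal Python sketch. It is hypothetical and not how Newelle actually works: `run_with_confirmation` is an illustrative name, and the `confirm` callback stands in for whatever prompt a real assistant would show.

```python
import shlex
import subprocess
from typing import Callable, Optional


def run_with_confirmation(
    command: str,
    confirm: Callable[[str], str] = input,
) -> Optional[subprocess.CompletedProcess]:
    """Hypothetical gate: show the proposed command, run it only on an explicit 'y'."""
    answer = confirm(f"The assistant wants to run:\n  {command}\nRun it? [y/N] ")
    if answer.strip().lower() != "y":
        # Anything other than 'y' (including just pressing Enter) means no.
        print("Skipped.")
        return None
    # shlex.split + no shell: pipes, globs and `;` in the string are NOT interpreted.
    return subprocess.run(shlex.split(command), capture_output=True, text=True)


if __name__ == "__main__":
    result = run_with_confirmation("ls -l /tmp")
    if result is not None:
        print(result.stdout)
```

Defaulting to "no" and skipping the shell are the two choices that matter here. A command the user didn't approve never runs, and an approved one can't smuggle in extra commands.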

What I’d like to see, though it’s unclear whether it would be supported, is a LAN model. I have run Ollama models on a desktop before and interfaced with them remotely via SSH from another computer on the same network. This would be ideal: you keep your own local model on your own network, put it on a powerful but energy-efficient home server, and let it serve every device on the network, rather than each device running its own local model or using a corporate one.
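A LAN setup can also skip SSH and talk to Ollama’s HTTP API directly. This is a minimal sketch, assuming the server was started with `OLLAMA_HOST=0.0.0.0 ollama serve` so it listens on the network instead of only on localhost. The IP address is a placeholder, the model tag is just an example, and whether Newelle itself can point at a remote instance isn’t confirmed here.

```python
import json
import urllib.request

# Placeholder LAN address of the home server running Ollama
OLLAMA_HOST = "192.168.1.50"
OLLAMA_PORT = 11434  # Ollama's default port


def build_request(host: str, model: str, prompt: str, port: int = OLLAMA_PORT) -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(host: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the remote server and return the generated text."""
    with urllib.request.urlopen(build_request(host, model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    print(ask(OLLAMA_HOST, "deepseek-r1:7b", "Explain what a LAN is in one sentence."))
```

Any device on the network can run the same few lines, so only the server needs the RAM and GPU. Since the API has no authentication of its own, keep it off the public internet.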

Or, ORRRR…just do the stuff yourself and don’t further perpetuate this dumbshit until it doesn’t require an entire month’s worth of energy for an efficient home just to search “Hentai Alien Tentacle Porn” for you.

Buncha savages.

Big nope from me dawg

For some reason, these local LLMs are straight up stupid. I tried DeepSeek R1 through Ollama and it got everything wrong. Anyone else get the same results? I tried the 7b and 14b (if I remember these numbers correctly); the 32b straight up didn’t install because I didn’t have enough RAM.
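A back-of-the-envelope estimate shows why the 32b is where RAM runs out. This rough sketch assumes about 4 bits per weight, a ballpark for common quantized builds; the exact quantization Ollama ships varies. It counts only the weights. Context (KV cache), runtime overhead, and the rest of your system all need memory on top of that.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in GB (1 GB = 1e9 bytes).

    params * bits / 8 gives bytes; with params in billions the result is already in GB.
    """
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    for size in (7, 14, 32):
        print(f"{size}b at ~4 bits/weight: ~{weight_memory_gb(size, 4):.1f} GB of weights")
```

Under that assumption, the 32b needs roughly 16 GB for weights alone, versus about 3.5 GB for the 7b. A 16 GB machine won’t fit it once everything else is counted.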

I haven’t tested this but TBH as someone who has run Linux at home for 25 years I love the idea of an always alert sysadmin keeping my machine maintained and configured to my specs. Keep my IDS up to date. And so on.

Two requirements:

1. Be an open-source local model with no telemetry

2. Let me review proposed changes to my system and explain why they should be made

- That is not what this does

- You can certainly have unattended updates without an LLM in the mix.

Like what do you need to keep configured? lol Linux is set it and forget it. I’ve had installs be fine from day one to year 7. It’s not like Windows, where Microsoft is constantly changing things and changing your settings. Like it takes minimal effort to keep a Linux server/system going after initial configuration.

Do you use IDCS? If not, why not? Have you taken care of automating encryption and backup to cloud? There’s a new open source shared media server, are you interested in configuring, securing, and testing it?

It’s mostly set and forget, Earth is mostly harmless, etc